With a litany of tools and software floating around the internet, checking your SEO can seem a bit daunting. No single SEO tool can represent the search engine algorithms with 100% accuracy, so any automated SEO tool you use can only provide basic information about how your site is performing. I’m not discouraging the use of these SEO tools; I am simply presenting a list of options to manually check and track your SEO efforts. My hope is to show you the steps you can take to improve your site’s search engine visibility, usability, and positioning. Don’t miss any key aspect: these tips will let you put a process in place to go through and check your site systematically.

SEO Checker: How Many Indexed Pages Does Your Site Have?

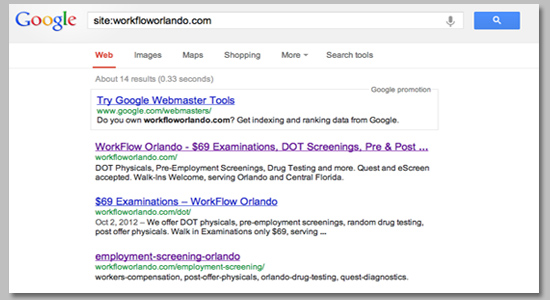

Do you want to know the best way to find out how many of your site’s pages Google is indexing? Ask Google! Simply visit Google and type site:yoursitename.com into the search bar. This is the first SEO checker tactic I recommend: use Google to gauge how many of your pages are indexed. For this example, I used Workfloworlando.com. It makes a perfect example because the site was only built in October 2012 and has not yet begun any SEO efforts. The image above shows what will display.

Right underneath the search bar it reads: “About 14 results.” This means Google has roughly 14 pages from this domain in its index, so about 14 pages are eligible to rank. Try it on your own site; you want every content page you care about to show up. A common question my clients ask is, “Should all of my pages show up?” The answer varies. Some pages won’t appear at all; for example, pages that are password protected or require a login to access will not show up in the SERPs (search engine results pages). Typically, though, you want all of your public pages to show up, because that indicates Google’s crawlers can access your site properly.
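If you check several sites regularly, it can help to build the `site:` query URL the same way every time. The sketch below is a hypothetical helper (the name `site_query_url` is illustrative, not part of any tool); it assumes you pass either a bare domain or a full URL, and it drops a leading “www.” so the query also covers other subdomains. Keep in mind that the “About N results” figure Google shows is an estimate, not an exact count.

```python
from urllib.parse import urlencode, urlparse


def site_query_url(site: str) -> str:
    """Build a Google search URL for a `site:` query (hypothetical helper)."""
    # Accept either "example.com" or "https://www.example.com/some/page".
    host = urlparse(site if "://" in site else "//" + site).netloc.lower()
    # Drop a leading "www." so the query matches the whole domain.
    if host.startswith("www."):
        host = host[4:]
    # urlencode percent-encodes the colon in "site:".
    return "https://www.google.com/search?" + urlencode({"q": "site:" + host})


print(site_query_url("http://www.Workfloworlando.com/about"))
# https://www.google.com/search?q=site%3Aworkfloworlando.com
```

Open the printed URL in a browser and compare the reported count with the number of public pages you know your site has.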

What If A Substantial Number Of Pages Are Not Being Indexed?

Uh oh! Your ship has sunk; throw in the towel. Call it quits! Just kidding. This is quite a common problem with multiple solutions. Let’s dive into the most common error that can cause it.

Robots.txt File Is Blocking Pages

The Robots Exclusion Protocol (REP) is a group of web standards that regulate web robot behavior and search engine indexing. The way your robots.txt file is configured may block crawlers from the directory a URL sits in, or from that specific URL. A robots.txt file can list the directories or files that crawlers should not access. Carefully check that it doesn’t block any important page that should be crawled and ranked in search engines. How do you do this? Open the robots.txt file in the root folder of your website (for example, yoursitename.com/robots.txt). Make sure it’s placed in that top-level directory; crawlers only look for it there.

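A `User-agent: *` line followed by `Disallow: /` tells every crawler, Googlebot included, to stay out of the entire site. To let crawlers in, remove that rule or leave its value empty (`Disallow:`); Google also honors an explicit `Allow: /`.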
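To see which of your pages a given robots.txt blocks, you can run the file through Python’s standard `urllib.robotparser` module. The sketch below is a minimal illustration, assuming you paste the file contents in as a string; the helper name `blocked_urls` and the example.com URLs are hypothetical placeholders.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that robots_txt forbids user_agent to crawl (hypothetical helper)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]


# Blocks every crawler from the whole site.
BLOCK_ALL = "User-agent: *\nDisallow: /\n"
# An empty Disallow value blocks nothing.
OPEN = "User-agent: *\nDisallow:\n"
# Blocks only one directory.
PARTIAL = "User-agent: *\nDisallow: /members/\n"

PAGES = [
    "https://example.com/",
    "https://example.com/services",
    "https://example.com/members/login",
]

for name, rules in [("BLOCK_ALL", BLOCK_ALL), ("OPEN", OPEN), ("PARTIAL", PARTIAL)]:
    print(name, blocked_urls(rules, PAGES))
```

To test a live file instead, `RobotFileParser` also provides `set_url()` and `read()`, which fetch robots.txt over the network. One caveat: Python’s parser applies rules in file order (first match wins), while Google uses the most specific matching rule, so results can differ for files that mix `Allow` and `Disallow` lines.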

If this seems a bit too complicated, no worries! It never hurts to consult a professional to assist you in these matters. Properly configuring the crawl structure of a website can be rather complicated. This is just a simple suggestion to remedy a very common flaw.

SEO Checker: Manually Check Your On-Page Content

Another great do-it-yourself SEO check I recommend is reviewing your on-page content! Oftentimes the content on the page is totally irrelevant to what you are trying to rank for; in other cases it is totally overstuffed with the keyword. In my experience, it’s always one of these two extremes. I wrote a program based on Latent Semantic Indexing (LSI), a retrieval technique that approximates how a search engine might judge what a page is about. Basically, it analyzes which words occur together in the body text and infers the underlying topics on its own. For my clients, I run this program on each page and mathematically craft each article to rank higher. But there are also basic guidelines and principles you can follow that will get you very close to a fully optimized page!

My Suggestions:

- Place your main keyword somewhere between the 7th and 10th word of each paragraph

- Use an H2 that contains a variation of your main keyword

- Set your Title Tag to this format “Main Keyword for Page | Description of Page – Site Name”

- Use your main keyword about 4-5 times per 500 words, plus 8-10 variations of it

- Target no more than 2 keywords per page

- Spread the keywords evenly throughout the article

- Put your keyword somewhere in the first 5 words of your last sentence

- Images help! Just make sure they are properly tagged with descriptive alt text.
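Several of these rules are mechanical enough to check with a short script. The sketch below is a hypothetical checker (the names `check_page`, `words`, and `starts_within` are illustrative), assuming you supply the page’s paragraphs, title, and H2s as plain strings. It tokenizes naively (lowercase, punctuation dropped), treats “a variation of your keyword” in an H2 as simply sharing a keyword word, and also checks whether the keyword appears as a hyphenated slug in the URL. It reports numbers rather than judging them; the targets are the rules of thumb listed above.

```python
import re
from urllib.parse import urlparse


def words(text: str) -> list[str]:
    """Lowercased word tokens; punctuation is dropped."""
    return re.findall(r"[a-z0-9']+", text.lower())


def starts_within(tokens: list[str], phrase: list[str], first: int, last: int) -> bool:
    """True if `phrase` starts at a 1-based word position between first and last."""
    for i in range(first - 1, min(last, len(tokens))):
        if tokens[i:i + len(phrase)] == phrase:
            return True
    return False


def check_page(keyword, paragraphs, title="", h2s=(), url=""):
    kw = words(keyword)
    body = [w for p in paragraphs for w in words(p)]
    uses = sum(body[i:i + len(kw)] == kw for i in range(len(body)))
    sentences = re.split(r"(?<=[.!?])\s+", " ".join(paragraphs).strip())
    return {
        # Keyword starts somewhere in words 7-10 of each paragraph.
        "keyword_in_words_7_to_10": [starts_within(words(p), kw, 7, 10) for p in paragraphs],
        # Rule of thumb: about 4-5 uses per 500 words.
        "uses_per_500_words": round(uses * 500 / len(body), 1) if body else 0.0,
        # Keyword starts within the first 5 words of the last sentence.
        "keyword_opens_last_sentence": starts_within(words(sentences[-1]), kw, 1, 5),
        # Title follows "Main Keyword | Description – Site Name".
        "title_leads_with_keyword": title.lower().startswith(keyword.lower()) and "|" in title,
        # Rough proxy for "an H2 with a variation of the keyword".
        "h2_mentions_keyword": any(set(kw) & set(words(h)) for h in h2s),
        # Keyword appears as a hyphenated slug in the URL path.
        "keyword_in_url": "-".join(kw) in urlparse(url).path.lower(),
    }


report = check_page(
    "Sarasota Acupuncture",
    [
        "Our clinic has served patients for years with sarasota acupuncture treatments.",
        "Sarasota acupuncture can ease stress and pain.",
    ],
    title="Sarasota Acupuncture | Gentle Care – Example Clinic",
    h2s=["Why choose acupuncture in Sarasota?"],
    url="http://lakewoodranchacupuncturist.com/sarasota-acupuncture",
)
for rule, result in report.items():
    print(rule, result)
```

The sample text is deliberately tiny, so its keyword rate comes out far above the 4-5 per 500 words target; on a real article that number is the one to watch for overstuffing.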

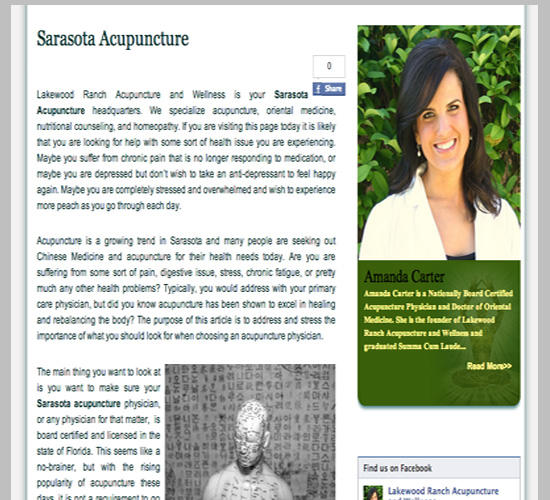

Above is a page of content from Lakewood Ranch Acupuncture and Wellness. The term she is trying to optimize this page for is “Sarasota Acupuncture.” The URL for the page is http://lakewoodranchacupuncturist.com/sarasota-acupuncture; note how the target term is right in the URL. Next, you will see the H1 is Sarasota Acupuncture. Take a look inside the paragraph content: “Sarasota Acupuncture” begins at the 8th word. The keyword is spread throughout the page, evenly distributed to simulate natural content focus. Check back later this month for a more dedicated blog post on content structure.
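The LSI program mentioned earlier in this section is the author’s own and isn’t shown here. As a rough illustration of the general technique only, the sketch below builds a term-document matrix of raw word counts, keeps the top `k` singular vectors from an SVD, and compares a query with each document in that reduced “concept” space using cosine similarity. The function names, the three sample documents, and `k=2` are assumptions for the example; real LSI systems usually add term weighting (such as TF-IDF), stop-word removal, and far larger corpora. It requires NumPy.

```python
import re

import numpy as np


def tokenize(text: str) -> list[str]:
    # Lowercase word tokens; no stemming or stop-word removal (simplifying assumption).
    return re.findall(r"[a-z0-9']+", text.lower())


def lsi_scores(docs: list[str], query: str, k: int = 2) -> list[float]:
    """Cosine similarity between `query` and each doc in a k-dimensional LSI space."""
    vocab = sorted({w for d in docs for w in tokenize(d)})
    index = {w: i for i, w in enumerate(vocab)}
    # Term-document matrix of raw counts: rows are terms, columns are documents.
    m = np.zeros((len(vocab), len(docs)))
    for j, d in enumerate(docs):
        for w in tokenize(d):
            m[index[w], j] += 1
    # Truncated SVD: keep the k strongest "concepts" (left singular vectors).
    u, _, _ = np.linalg.svd(m, full_matrices=False)
    uk = u[:, :k]
    # Project documents and the query into the same concept space (U_k^T x).
    doc_vecs = uk.T @ m
    q = np.zeros(len(vocab))
    for w in tokenize(query):
        if w in index:  # words never seen in the documents are ignored
            q[index[w]] += 1
    q_vec = uk.T @ q
    scores = []
    for j in range(len(docs)):
        d = doc_vecs[:, j]
        denom = np.linalg.norm(d) * np.linalg.norm(q_vec)
        scores.append(float(d @ q_vec / denom) if denom > 0 else 0.0)
    return scores


DOCS = [
    "sarasota acupuncture clinic offers acupuncture treatment",
    "workflow software for orlando businesses",
    "acupuncture and wellness treatment in sarasota",
]
print(lsi_scores(DOCS, "sarasota acupuncture"))
print(lsi_scores(DOCS, "wellness"))
```

The second query shows the “semantic” part: the first document never uses the word “wellness,” yet it scores close to 1 because, in the two-concept space, it shares a topic with the third document. The unrelated workflow document scores close to 0 for both queries.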
